When OpenAI's ChatGPT went mainstream in November of 2022, our internal Slack channels pinged with haikus and short stories, bizarre prompt engineering, and lots of other impractical, fun content. On a more serious note, we also put ChatGPT’s capabilities to the test as a business tool — we asked it to write a strategic priority brief for a company like LogicGate and it did … a pretty darn good job.

It was clear to us that generative AI would fundamentally change how the world develops and interacts with technology. That got us thinking about how we could use AI models and systems not only to improve our own organization, but also to enable customers to better adopt, govern, and manage AI technologies both in and outside of the Risk Cloud platform.

Balancing Risks and Rewards with Responsible AI

Despite our excitement, we also fully understood the concerns our clients, their customers, and other stakeholders had about adopting this rapidly evolving technology. Even today, it’s advancing just as quickly as it appeared, and we’ve already seen the horror stories that have come out of improper use of large language models like ChatGPT.

When we first asked our customers and Customer Advisory Board members how they felt about adopting AI-powered technology within their organizations, much of the conversation centered on data security and privacy implications. This is an extraordinarily important consideration for any organization that handles sensitive data, which these days is almost every organization.

AI models survive and grow on a healthy diet of data. Using this technology requires organizations to feed it their own information and, in some cases, their customers' information. Any organization looking to benefit from AI needs to understand why and how its data is processed while also complying with customer and regulatory privacy requirements.

While using third parties like Amazon Web Services, Google Cloud, and Microsoft Azure to process data is not a new concept for tech companies, organizations around the world are understandably nervous about the uncertainty swirling around artificial intelligence and machine learning. Striking the right balance between AI risks and AI rewards requires a greater level of visibility and collaboration across the extended enterprise than past technology advancements did.

Moving Fast and Safe with AI

All of this means that harnessing the vast potential of AI, while also avoiding its multitude of pitfalls, necessitates a measured approach. Internally, we started by forming an AI Working Group to govern AI use cases across LogicGate and within the Risk Cloud platform. Timely revisions to our cyber and data security practices ensure we’re not putting customer data at risk or pushing AI-generated code into our product without human review.

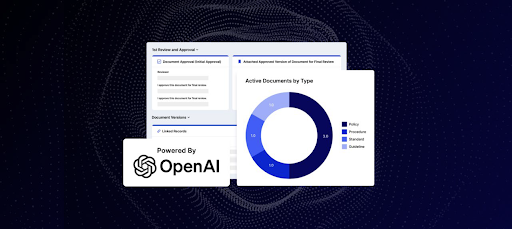

This foundation allowed our LogicGate product team to move quickly yet safely when developing our very first AI-Powered Risk Cloud Application, Policy & Procedure Management. During development, we held multiple in-depth conversations about best practices with our executives, directors, and customers. Those conversations were crucial in shaping our current approach to using AI at LogicGate. For example, we’ve decided to give Risk Cloud users full control over how and when their data is processed by AI. We’re doing this by ensuring that any features we build into Risk Cloud that interact with or rely on AI are opt-in.

With these initial guardrails in place, we were eager to keep pushing the boundaries of what’s possible in GRC with AI and to find innovative ways to use this technology to help our customers solve their greatest risk and compliance challenges. That led to our very first AI Week Hackathon in the summer of 2023, where members of the LogicGate Product team spent four days brainstorming and building our platform’s next AI-driven solutions.

The results of the Hackathon were nothing short of astounding. We answered questions such as “What if you could ask a chatbot to help you automatically fill out an IT security questionnaire?” and “Could you have AI recommend risk mitigations, generate board-ready ERM reports, or point out policy differences?” We discovered that those types of efficiency gains, and many others, are absolutely possible with AI.

Innovating Through Uncharted Waters: What’s Here, What’s Next

In addition to enhancing the Risk Cloud customer experience, LogicGate has invested in AI to drive productivity and efficiency in our own R&D organization. As a first step, we implemented Copilot across our Engineering organization and added a range of AI capabilities in Product and Security to boost team output and effectiveness. These same teams will continue to work hand-in-hand with our customers and partners to deliver safe, innovative AI-powered solutions to pressing GRC problems.

Here at LogicGate, we always say that the best companies aren’t built by avoiding risks, but by taking the right ones. Artificial intelligence certainly presents its fair share of risks, but we believe that with the proper safeguards in place, it will revolutionize how organizations approach GRC. As we look to the future, it’s our mission to enable both ourselves and our customers to innovate quickly while staying safe with responsible AI features and governance solutions in Risk Cloud.