Leveraging generative AI to enhance business performance is a critical priority for most companies today. How can we be more efficient? How can we innovate faster and disrupt the market? How can we reduce costs in our business? Faster, more, leaner, better: leaders are having to answer to the board and investors on every count. Yes, adopting generative AI will help drive those results, but if it’s not adopted with proper governance for privacy and security, you could open your doors to bad actors through AI models. Leaders across IT, risk, security and compliance functions need to work together to implement a holistic approach to managing, scaling and harnessing AI adoption within their organizations to strengthen resilience.

According to McKinsey’s “The economic potential of generative AI: The next productivity frontier” research report, 63 percent of respondents characterize the implementation of gen AI as a “high” or “very high” priority; however, 91 percent of respondents don’t feel “very prepared” to do so in a responsible manner. Furthermore, the recent “OCEG Use of AI for GRC” research report found that 82 percent of respondents agree or strongly agree they must adopt generative AI, yet only 12 percent said their organization has a documented AI governance plan. Adoption concerns are valid – security professionals continue to see an increase in cybercrime, and the average cost of a data breach in 2023 was a painful $4.45 million. Is the risk worth the reward? The discrepancy is clear – businesses acknowledge they need to adopt AI technology, but a clear and confident path forward has not yet been paved.

AI Usage Under Your Nose

Lack of clarity and understanding can keep a company from implementing AI guidelines and guardrails, but as AI rapidly evolves, taking a lackadaisical approach may come with ramifications. For example, while you’re reading this, someone in your organization is most likely using a generative AI model to complete a task. At LogicGate, we say it’s not IF a cyber attack will occur, but rather WHEN it will occur – similarly with AI, it’s not IF your employees are using ChatGPT, Copilot, Perplexity AI or other AI models, but rather WHEN you will find out. Your sales team may already be leveraging Zoho’s Zia, coders are becoming proficient in Python to build new generative AI capabilities, and many other departments are seeking AI models that will make them more efficient and productive, driving value for the business. To be clear, using AI to be more resourceful and effective is a good thing; however, not governing and monitoring AI usage exposes your organization to threats and opportunistic bad actors. As a matter of risk, some of the world’s largest tech companies, including ones building LLMs, have banned GenAI. Samsung, for example, did so after its engineers accidentally leaked internal source code by uploading it into ChatGPT. The point is that the AI generational wave has hit our shores, so learn how to swim, or you’ll be vulnerable to its undertow.

While GRC teams have historically been stigmatized as the “Department of No” and perceived as a cost center on budget sheets, that is no longer the case. GRC is a business enabler. In fact, the recent Forrester “Generative AI: What It Means For Governance, Risk, And Compliance” report shows GRC is a strategic muscle in business, and AI is helping drive a fundamental shift from protective and reactive measures to dynamic and predictive risk management. To fully reap the benefits of generative AI, businesses need to empower their GRC functions to approach adoption through a holistic lens. LogicGate’s expertise is in holistic GRC programming, which is why our new AI Governance Solution was purpose-built to help enterprises govern, manage and scale the implementation and usage of AI technology throughout their organizations. You can’t protect what you don’t know you have, and a siloed, point-solution approach won’t be effective in reviewing, approving and managing AI usage enterprise-wide, nor will it offer the flexibility you need to update, pivot or scale your AI governance program.

Why Holistic Governance Matters

Fundamentally, AI governance represents a paradigm shift in how businesses must approach AI. From the U.S. Blueprint for an AI Bill of Rights to the EU AI Act, a strong focus on AI governance is emerging, with requirements around security, transparency and accountability. Complacency doesn’t exist in GRC, which means those functions must act swiftly and safely. This also means implementing robust processes and controls to proactively identify, assess and mitigate the unique risks AI can introduce across the entire AI lifecycle. Effective AI governance allows organizations to harness AI’s power while ensuring compliance with internal policies and external regulations. And AI governance does not just apply to employee and department usage – businesses also need transparency into, and governance of, third-party AI usage. If you don’t have a structured way to evaluate how your vendors and partners are managing AI, you could be left with significant blind spots in your overall AI governance strategy and lack the visibility needed to identify or mitigate risks stemming from their AI usage.

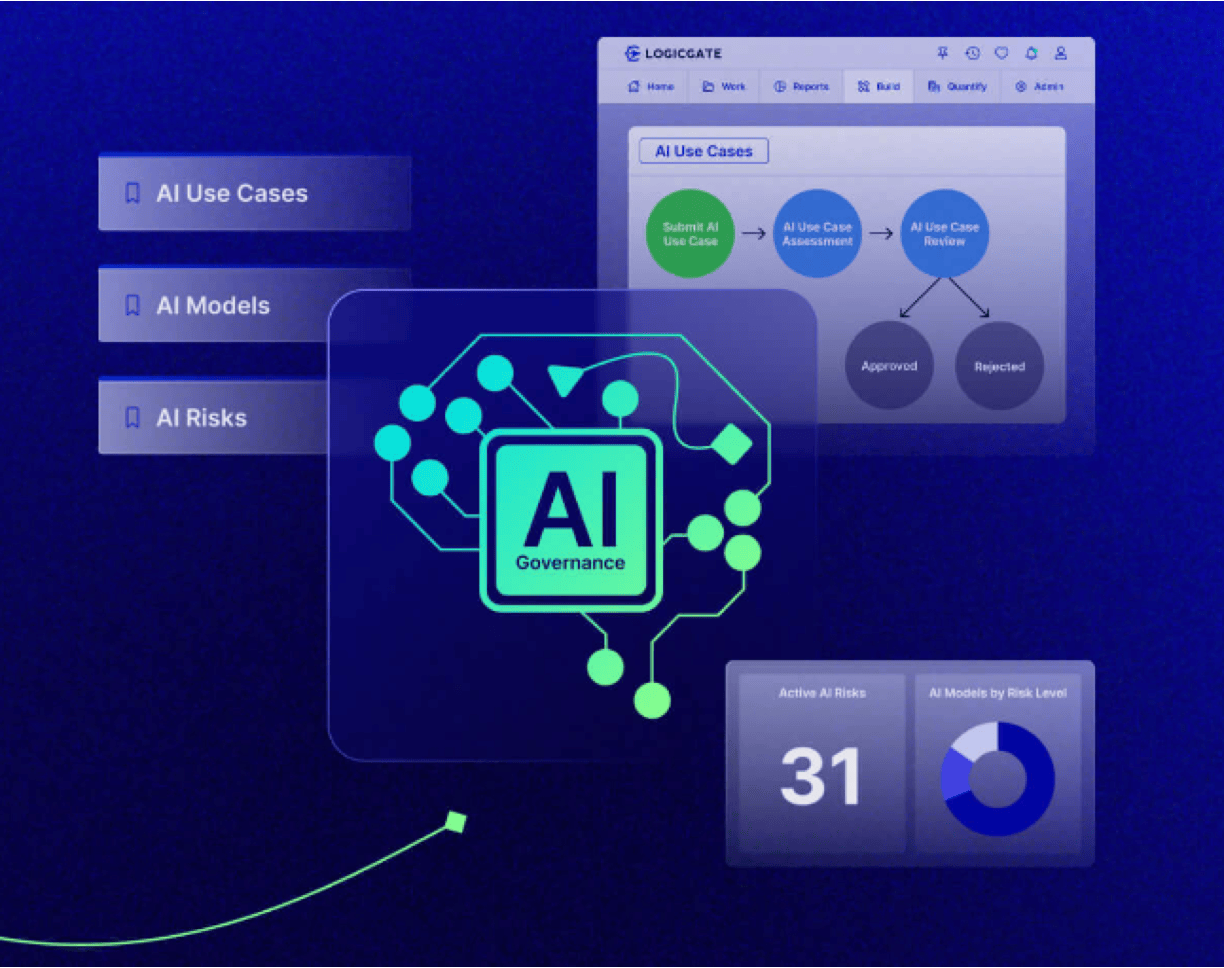

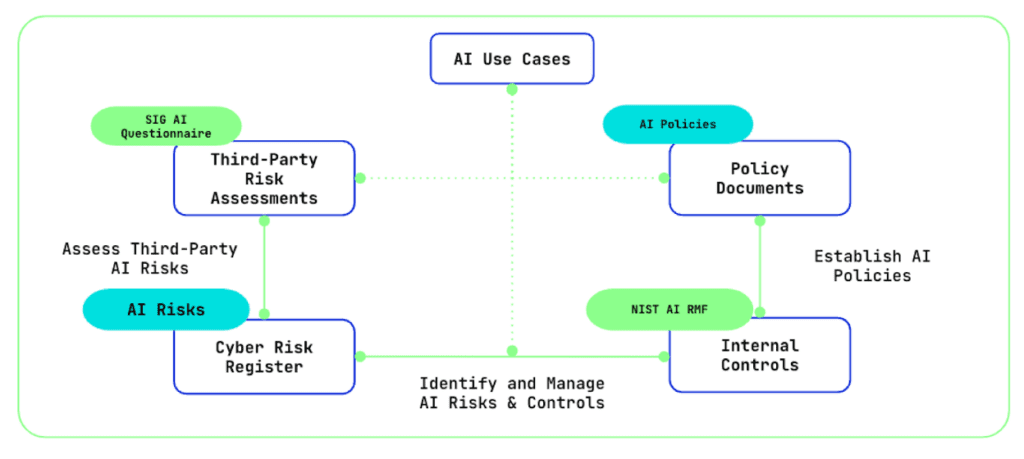

Our AI Governance Solution provides the much-needed holistic view, control and scalability that AI adoption requires. Within the solution, our new AI Use Case Management Application seamlessly integrates with your cyber risk, controls compliance, third-party risk and policy management programs to embed AI governance and usage best practices into every business initiative. The Application streamlines and standardizes your organization’s internal use of AI through a centralized submission, review and approval process for every AI use case and model, and gives you the means to track attestations and enforce adherence to AI policies in Risk Cloud’s Policy & Procedure Management Application. Teams can identify and centralize AI risks inside their organization’s Cyber Risk Register, automate assessment, monitoring and mitigation workflows, and evaluate AI-Specific Controls for best practices and control recommendations from the NIST AI Risk Management Framework (RMF) to stay ahead of emerging AI risks. Teams can also launch AI third-party risk questionnaires from Shared Assessments inside Risk Cloud’s Third-Party Risk Management: SIG Lite Application to help identify which vendors embed AI technologies into their solutions and what, if any, impact that has on the business.
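For readers who think in code, the idea of a centralized submission, review and approval process can be pictured with a small Python sketch. This is purely illustrative and is not how Risk Cloud or the AI Use Case Management Application is implemented: the `AIUseCase` and `UseCaseRegistry` names, the four statuses and the example use cases are all assumptions made up for this example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    SUBMITTED = "submitted"
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    REJECTED = "rejected"


# Hypothetical rule set: every use case must be reviewed before a decision,
# and approved/rejected are final states in this sketch.
ALLOWED_TRANSITIONS = {
    Status.SUBMITTED: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.APPROVED, Status.REJECTED},
    Status.APPROVED: set(),
    Status.REJECTED: set(),
}


@dataclass
class AIUseCase:
    name: str
    owner: str   # team or person accountable for the use case
    model: str   # the AI model or tool being used
    status: Status = Status.SUBMITTED
    history: list = field(default_factory=list)  # audit trail of statuses


class UseCaseRegistry:
    """A single, central place where every AI use case is recorded."""

    def __init__(self):
        self._cases = {}

    def submit(self, name, owner, model):
        if name in self._cases:
            raise ValueError(f"use case already submitted: {name}")
        case = AIUseCase(name=name, owner=owner, model=model)
        case.history.append(Status.SUBMITTED)
        self._cases[name] = case
        return case

    def transition(self, name, new_status):
        case = self._cases[name]
        if new_status not in ALLOWED_TRANSITIONS[case.status]:
            raise ValueError(
                f"cannot move {name} from {case.status.value} to {new_status.value}"
            )
        case.status = new_status
        case.history.append(new_status)
        return case

    def by_status(self, status):
        # Names of all use cases currently in the given status.
        return [c.name for c in self._cases.values() if c.status is status]


registry = UseCaseRegistry()
registry.submit("sales-email-drafts", owner="sales", model="hypothetical-llm")
registry.transition("sales-email-drafts", Status.IN_REVIEW)
registry.transition("sales-email-drafts", Status.APPROVED)
print(registry.by_status(Status.APPROVED))
```

The point of the sketch is the shape of the process rather than the code itself: one registry, one set of allowed steps, and a history for each use case, so that nothing reaches "approved" without having passed through review.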

AI is radically changing the way businesses operate, and, frankly, it’s shifting the habits and behaviors of society as a whole. We saw this jolt of change in the 1980s with the introduction of the PC, then the 1990s gave us the internet. The 2000s put smartphones in our hands, and social media – for many – has only tightened that grip. The AI wave will be no different, so it’s imperative that companies take a proactive and protective approach to holistically governing AI to quickly and safely reap the benefits.